Feature Selection/Elimination using Scikit-learn in Python

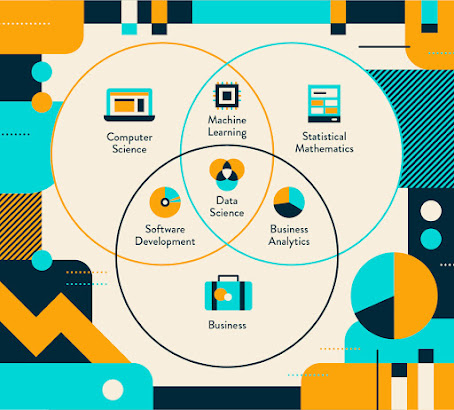

Feature selection is one of the core concepts in machine learning, and it significantly influences a model's performance. The features you use to train a model largely determine the results you can achieve. Feature engineering is the process of transforming the collected data into features that best represent the problem you are trying to solve, which improves the model's performance and consistency.

This post covers four techniques:

- Univariate Selection

- Recursive Feature Elimination

- Principal Component Analysis

- Feature Importance

The classes in the `sklearn.feature_selection` module can be used for feature selection/dimensionality reduction on sample sets, either to improve the accuracy scores of estimators or to boost their performance on very high-dimensional datasets.

Reduces overfitting: Less redundant data means less opportunity for the model to make decisions based on noise.

Improves accuracy: Less misleading data means that modeling accuracy improves.

Reduces training time: Less data means that algorithms train faster.

Feature Importance:

Ensembles of decision trees (such as Random Forest or Extra Trees) can also compute the relative importance of each feature. These importance values can be used to guide a feature selection process. The recipe for this section builds an Extra Trees ensemble on the iris flowers dataset and displays the relative importance of each feature.
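As a minimal sketch of what such a recipe might look like (not necessarily the original notebook's code), the snippet below fits an `ExtraTreesClassifier` on iris and prints the importances. The `n_estimators=100` and `random_state=0` settings are assumptions chosen for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import ExtraTreesClassifier

# Load the iris flowers dataset (150 samples, 4 features).
iris = load_iris()
X, y = iris.data, iris.target

# Fit an Extra Trees ensemble; the hyperparameters here are illustrative.
model = ExtraTreesClassifier(n_estimators=100, random_state=0)
model.fit(X, y)

# feature_importances_ holds one non-negative score per feature, summing to 1.
importances = model.feature_importances_

# Print the features from most to least important.
ranked = sorted(zip(iris.feature_names, importances), key=lambda pair: pair[1], reverse=True)
for name, score in ranked:
    print(f"{name}: {score:.3f}")
```

Features with the largest scores contribute most to the ensemble's splits, so low-scoring features are candidates for removal.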

Recursive feature elimination (RFE):

Unlike the univariate method, RFE starts by fitting a model on the entire set of features and computing an importance score for each predictor. The weakest features are then removed, the model is re-fitted, and importance scores are computed again until the specified number of features remains. Features are ranked by the model's `coef_` or `feature_importances_` attributes, and a small number of features is eliminated on each loop.
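The loop described above can be sketched with scikit-learn's `RFE` class. The choice of `LogisticRegression` as the estimator, the iris dataset, and `n_features_to_select=2` are assumptions for illustration only.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import RFE
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Any estimator exposing coef_ or feature_importances_ can drive RFE.
estimator = LogisticRegression(max_iter=1000)

# Remove one feature per iteration (step=1) until two features remain.
rfe = RFE(estimator=estimator, n_features_to_select=2, step=1)
rfe.fit(X, y)

print("Selected mask:", rfe.support_)   # True for features that were kept
print("Ranking:", rfe.ranking_)         # 1 = selected; higher = eliminated earlier

# Keep only the selected columns.
X_reduced = rfe.transform(X)
print(X_reduced.shape)
```

A larger `step` removes more features per iteration, which trades some precision in the ranking for fewer model fits.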

Univariate Selection:

Univariate feature selection works by selecting the best features based on univariate statistical tests. It can be seen as a preprocessing step to an estimator. Scikit-learn exposes feature selection routines as objects that implement the `transform` method:

- SelectKBest removes all but the k highest-scoring features.

- SelectPercentile removes all but a user-specified highest-scoring percentage of features.

- SelectFpr (false positive rate), SelectFdr (false discovery rate), and SelectFwe (family-wise error) apply common univariate statistical tests to each feature.
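A short sketch of the first two selectors in the list above follows. The ANOVA F-test (`f_classif`) as the scoring function, the iris dataset, and the values `k=2` and `percentile=50` are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.feature_selection import SelectKBest, SelectPercentile, f_classif

X, y = load_iris(return_X_y=True)

# Keep the 2 features with the highest ANOVA F-scores.
kbest = SelectKBest(score_func=f_classif, k=2)
X_kbest = kbest.fit_transform(X, y)
print("F-scores:", kbest.scores_)
print("Kept columns:", kbest.get_support(indices=True))

# Keep the top 50% of features by the same score.
percentile = SelectPercentile(score_func=f_classif, percentile=50)
X_pct = percentile.fit_transform(X, y)
print(X_kbest.shape, X_pct.shape)
```

Because each feature is scored on its own, these selectors are cheap to run, but they cannot detect features that are only useful in combination.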

Principal Component Analysis:

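Principal Component Analysis (PCA) is an important machine learning method for dimensionality reduction, and it is sometimes called a data reduction technique. Unlike the selection methods above, PCA does not keep a subset of the original features; it projects the data onto new axes that are linear combinations of them. A property of PCA is that you can choose the number of dimensions, or principal components, in the transformed result.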
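As a minimal sketch of choosing the number of components, the snippet below reduces iris from four features to two. The dataset and `n_components=2` are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

X, y = load_iris(return_X_y=True)

# Project the 4 original features onto the 2 leading principal components.
pca = PCA(n_components=2)
X_pca = pca.fit_transform(X)

# Fraction of the total variance captured by each retained component.
print("Explained variance ratio:", pca.explained_variance_ratio_)
print(X_pca.shape)
```

Checking `explained_variance_ratio_` is a common way to judge whether the chosen number of components retains enough of the data's variance.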

Colab link is here.
